Yves Jacquier has been at Ubisoft since 2004, serving in a wide variety of roles. He has led the company’s efforts in artificial intelligence since 2011, and in 2016 he founded Ubisoft La Forge, which is “a prototyping space where ideas on technology, originating from a collaboration between university research and production teams, are brought to life.” Jacquier holds a PhD in particle physics from École Centrale Paris and was a research engineer at CERN (Conseil Européen pour la Recherche Nucléaire). At the 2019 D.I.C.E. Summit, Jacquier will be discussing the game-changing impact of artificial intelligence.

AI has long been used in game development to determine non-player character behavior. Since AI has advanced greatly in the last decade, in what areas of development do you see AI usage becoming more helpful and common?

First, I'd like to set some definitions. There's AI, which is of course artificial intelligence in general. It covers many, many techniques. It's a domain that's over 70 years old; it started with Turing and others in the 1940s. When we're talking about recent developments in AI, we're talking specifically about machine learning. It's a very specific form of artificial intelligence that uses tons of data to find and generalize the underlying patterns in it, and is then able to use those generalizations to make predictions on new data.
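To make the idea of "finding a pattern in data and generalizing it to new data" concrete, here is a deliberately tiny sketch: fitting a straight line to example points by ordinary least squares, then using that line to predict an input it never saw. The function names are illustrative only; the machine learning systems discussed in this interview use far larger models and datasets, but they follow the same fit-then-predict loop.

```python
def fit_line(xs, ys):
    """Ordinary least squares: find slope and intercept so that y ≈ slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the best-fit slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned pattern to an input that was not in the training data."""
    slope, intercept = model
    return slope * x + intercept


# Training data that happens to follow y = 2x + 1.
model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(model)               # (2.0, 1.0)
print(predict(model, 10))  # 21.0
```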

Machine learning was used in the 1980s by the US Postal Service to recognize handwritten characters, for example. These days, tools and computing power have advanced so much that machine learning can be used to accomplish more complicated tasks: image recognition, speech recognition, translation, and so on.

Once you understand that machine learning uses data, you can guess how it can be used with the tons of specific data we have in the gaming industry. We have histories of animation data, texture data, and so on. The biggest benefits of machine learning can be seen in creation assistance: animation creation, text-to-speech, and so on.

So we have data, but we also have game engines. You can look at our game engines as simulations of the real world, and they can be used as the first stage of real-life AI development. Let's say you want to train an autonomous vehicle, whether it's a car or a drone. You can use a simulation before testing actual vehicles on a closed circuit. What autonomous-vehicle researchers are aiming for is real-life behavior in those simulations. When machine learning is applied to the game engine first, it creates better gaming experiences: our vehicles look way more realistic thanks to the development of this kind of technology.

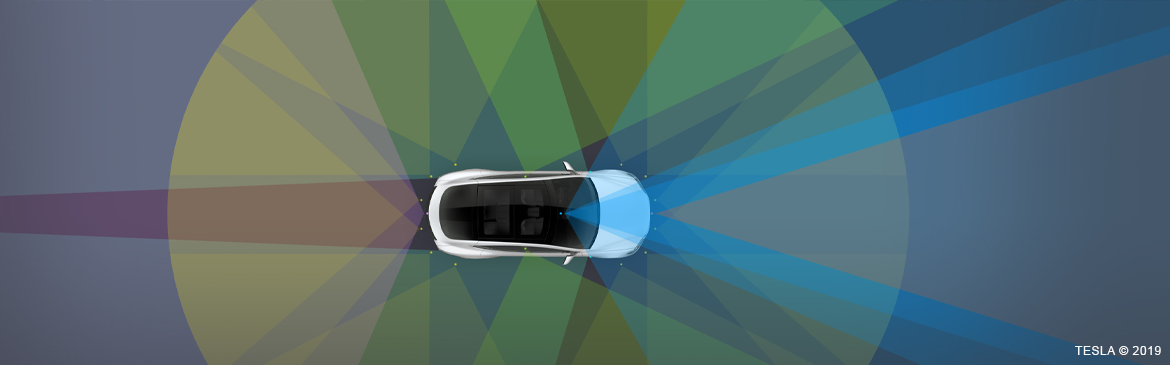

You've mentioned that training cars in games has the potential to help autonomous cars in the real world. Right now various companies like Waymo and Tesla seem to be trying to nail down vision with LiDAR, ultrasonic sensors, and cameras. What would data from videogames add to the mix?

When we're talking about autonomous transportation, it's not only autonomous cars. It can be flight too. When you think about any autonomous vehicle, you have three layers of challenges. First, you have to manage the path: the route from point A to point B. It might sound trivial for cars, since we have roads, but for cases like drones it's more complicated, especially when you have many drones in the same area. Second is the trajectory, which is the act of driving or flying itself. That's where most of the research is being done today. Finally, there’s the perception of the world itself: the environment, including other vehicles and pedestrians.

For the third challenge, most companies are using complex and expensive sensors. The issue with that is that it makes it significantly more difficult to democratize autonomous vehicles, even if we solved the challenges of path and trajectory. Tesla, for example, is taking a very different approach, using computer vision to get an accurate representation of the world primarily from cameras around the vehicle. To my knowledge, they haven't solved this computer vision challenge yet, but if it works at some point, then it's a winner-takes-all situation. Suddenly, autonomous vehicles become way more accessible to many types of people and many kinds of applications.

Now that we understand that there are many ways to solve this problem of representing the world surrounding the vehicle, how do we test the efficiency of the data captured? Most of the time, when you want to test the efficiency of an AI, you compare its prediction with what's called the “ground truth.” Obviously, the best AIs are the ones that are extremely close to the ground truth. Using our simulated worlds is one of the most efficient ways to set up a specific ground-truth scenario. If you want to evaluate what a car perceives of a pedestrian in various situations, you can simulate the information that sensors of various types or quality provide to the AI: what information a LiDAR would provide, for example, or how the car would behave with a noisy view. You can even reproduce rare conditions, like storms and sensor failures. Since you know the ground truth of a simulation made in a game engine, you can audit the real-life AI of an autonomous vehicle.
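The ground-truth audit described here can be sketched in a few lines. This is a toy illustration under stated assumptions: the "scene" is just a list of exact pedestrian distances known from the simulation, the hypothetical sensor model adds Gaussian noise and random dropouts (standing in for bad weather and sensor failures), and the stand-in "perception" takes the median of valid readings. None of these names or models come from Ubisoft or any real autonomous-vehicle stack; the point is only that knowing the true value lets you score the perceived one.

```python
import math
import random
import statistics


def simulate_readings(true_distance, noise_std, dropout_prob, n_samples, rng):
    """Hypothetical sensor model: Gaussian noise on each reading, plus random dropouts."""
    readings = []
    for _ in range(n_samples):
        if rng.random() < dropout_prob:
            readings.append(None)  # simulated sensor failure: no reading this sample
        else:
            readings.append(true_distance + rng.gauss(0.0, noise_std))
    return readings


def perceive_distance(readings):
    """Toy perception step: median of the valid readings, or None if every reading failed."""
    valid = [r for r in readings if r is not None]
    return statistics.median(valid) if valid else None


def evaluate(ground_truth, noise_std, dropout_prob, n_samples=5, seed=0):
    """Compare perceived distances against the known ground truth of the simulated scene.

    Returns (mean absolute error over detected pedestrians, number of missed pedestrians).
    """
    rng = random.Random(seed)  # fixed seed so a scenario can be replayed exactly
    errors, misses = [], 0
    for true_distance in ground_truth:
        estimate = perceive_distance(
            simulate_readings(true_distance, noise_std, dropout_prob, n_samples, rng))
        if estimate is None:
            misses += 1
        else:
            errors.append(abs(estimate - true_distance))
    mae = statistics.mean(errors) if errors else math.nan
    return mae, misses


# Pedestrian distances (metres), known exactly because the scene is simulated.
truth = [4.0, 7.5, 12.0, 20.0, 35.0]
print(evaluate(truth, noise_std=0.2, dropout_prob=0.0))  # clear weather
print(evaluate(truth, noise_std=2.0, dropout_prob=0.3))  # simulated storm
```

Because the seed is fixed, the same rare condition can be replayed against different perception algorithms and their scores compared directly.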

You've also mentioned that AI use in games could possibly help healthcare. How would that work?

I have two very concrete examples in mind. First, for some mental illnesses, traditional psychotherapies do not work. With schizophrenia, one of the techniques is to have the patient confront his or her inner voices, interact with them, and learn how to fight them. This is the kind of mechanic the gaming industry has mastered over the years. The interesting part is that, at this point, we share the same challenges but for different purposes. Both the medical and gaming domains want avatars to be more relatable. They both want to understand which psychological mechanisms matter, and which don't, in making avatars more believable. They both want to understand how to better implement them for real-time interaction. So, as a gaming company, that’s one of the things we're discussing with healthcare researchers.

Another example has to do with motion capture. The technology of placing numerous sensors on a person and capturing their movement with cameras comes from the medical world, where the initial purpose was to capture movement in order to track physical conditions. The gaming industry has been using this technology to create more compelling animations for years, so we've created tons and tons of data. We've also created AI-fueled tools to manage this data, like search engines that analyze the movement data itself rather than the descriptions, which were entered manually and are full of errors. Such technologies could be applied to healthcare to assist in motion analysis and accelerate diagnosis. That's another example of the technology we've been discussing with healthcare leaders.
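Searching motion data by its content, rather than by hand-typed labels, can be sketched as nearest-neighbour retrieval. This minimal version assumes each clip is a list of poses (tuples of joint coordinates), resamples every clip to a common length so clips of different durations can be compared, and ranks library clips by Euclidean distance. These are simplifying assumptions and the names are hypothetical; it is not a description of Ubisoft's tools, and a production system would also need to handle differences in timing (for example with dynamic time warping), character proportions, and orientation.

```python
import math


def resample(frames, length):
    """Linearly resample a clip (a list of equal-length pose tuples) to `length` frames."""
    if length < 2:
        raise ValueError("length must be at least 2")
    if len(frames) == 1:
        return [tuple(frames[0])] * length
    out = []
    for i in range(length):
        t = i * (len(frames) - 1) / (length - 1)  # fractional source-frame index
        lo = math.floor(t)
        hi = min(lo + 1, len(frames) - 1)
        w = t - lo
        out.append(tuple((1 - w) * a + w * b for a, b in zip(frames[lo], frames[hi])))
    return out


def clip_distance(a, b, length=32):
    """Euclidean distance between two clips after resampling both to the same length."""
    ra, rb = resample(a, length), resample(b, length)
    return math.sqrt(sum((x - y) ** 2
                         for fa, fb in zip(ra, rb)
                         for x, y in zip(fa, fb)))


def search(query, library, k=3, length=32):
    """Return the names of the k clips in `library` whose motion is closest to `query`."""
    return sorted(library, key=lambda name: clip_distance(query, library[name], length))[:k]


# Each pose here is a single (x, y) root position; real poses would hold every joint.
library = {
    "walk_forward": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
    "jump_in_place": [(0.0, 0.0), (0.0, 1.0), (0.0, 0.0)],
    "idle": [(0.0, 0.0), (0.0, 0.0)],
}
query = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.5, 0.0), (2.0, 0.0)]
print(search(query, library, k=1))  # ['walk_forward']
```

The query was recorded at a different frame count than the library clip, yet it matches, because the comparison is made on the motion itself after resampling.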

Do you see AI and machine learning getting to the point where it's almost too good at creating accurate and realistic behaviors, perhaps requiring game creators to tone it down in the name of entertainment?

I think that's the case now. Bots are very specific AI agents. Players sometimes call non-player characters “bots,” but in reality bots are a specific kind of AI designed to mimic the behavior of a real human. We created bots to automatically test the impact of changes to a game, like when you introduce a new feature or a new character. The objective of those bots was to find bugs and balancing issues.

When we found we had such efficient bots, it was extremely tempting to try to play against them. What we found was that they were extremely boring opponents. We created these bots for maximum efficiency, not to be fun and not simply to be challenging. It's interesting from a research standpoint, since we're now working on the parameters that make bots fun to play against. We're doing things like adding delays to their reactions instead of having them be instantaneous and adding some randomness to their actions, and still we've found that it's not enough. We're working on finding ways to recreate a real, human play style. It involves many complex things in terms of mechanics and how to make them learn to be fun.
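The two tweaks mentioned here, delayed reactions and some randomness, can be sketched as a wrapper around an already-efficient policy. In this minimal illustration (the class name, parameters, and toy policy are all hypothetical), observations sit in a queue for a fixed number of ticks before the bot reacts to them, and with a given probability the bot picks a random action instead of the optimal one. As Jacquier says, tweaks like these were not enough on their own, so this shows only the starting point, not Ubisoft's approach.

```python
import random
from collections import deque


class HumanizedBot:
    """Wraps an "optimal" policy with a reaction delay and occasional random actions.

    Hypothetical illustration: names and parameters are not from any Ubisoft tool.
    """

    def __init__(self, optimal_policy, actions, delay_frames, random_action_prob, seed=None):
        self.optimal_policy = optimal_policy      # function: observation -> best action
        self.actions = list(actions)              # actions the bot may pick at random
        self.delay_frames = delay_frames          # how many ticks it takes to "react"
        self.random_action_prob = random_action_prob
        self.rng = random.Random(seed)
        self.pending = deque()                    # observations not yet reacted to

    def act(self, observation):
        """Return an action for this tick, or None while the bot is still reacting."""
        self.pending.append(observation)
        if len(self.pending) <= self.delay_frames:
            return None
        # The bot responds to what it saw `delay_frames` ticks ago, not to the present.
        seen = self.pending.popleft()
        if self.rng.random() < self.random_action_prob:
            return self.rng.choice(self.actions)  # imperfect, human-like choice
        return self.optimal_policy(seen)


def aim_at(target_x):
    """Toy "perfect" policy: always aim exactly at the target."""
    return f"aim_at_{target_x}"


bot = HumanizedBot(aim_at, actions=["fire", "reload", "strafe"],
                   delay_frames=2, random_action_prob=0.0, seed=1)
print([bot.act(x) for x in range(5)])
# [None, None, 'aim_at_0', 'aim_at_1', 'aim_at_2']
```

Keeping the efficient policy untouched and layering imperfections on top makes it easy to tune delay and randomness separately during playtests.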

Gaming communities and seemingly more and more of the Internet are known for having toxic communities. What solutions can be achieved through AI and machine learning?

That's very, very difficult. Obviously, we all want safe and caring communities, whether in gaming or in general. As parents, we can't accept cyberbullying. The problem is that we have limited control over what's going on in those various communities. We've worked on this and discovered that one important lead is to detect the very first weak signals of toxicity before they grow into something larger. It can be something as simple as a shift in the tone people use in forums, a change in the frequency of threads, or a change in the typical post length on specific topics. These can all be indications that the tone is changing. Once the tone within a community is set and generalized, it's much more difficult to address and influence.
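One simple way to watch for weak signals such as a change in thread frequency or in typical post length is to track each metric over time and flag when its recent average drifts away from its own history. This minimal sketch computes a z-score for the mean of a recent window against a preceding baseline window; the window sizes, the threshold of 3, and the function name are assumptions for illustration, it treats samples as roughly independent, and it is not the method used in Ubisoft's research. A flag here is only a prompt for a human to look, not a verdict on toxicity.

```python
import math
import statistics


def detect_shift(values, baseline_size, recent_size, z_threshold=3.0):
    """Flag a drift in a community metric (e.g. daily mean post length or threads per day).

    Compares the mean of the `recent_size` most recent samples with the preceding
    `baseline_size` samples and returns (z_score, flagged).
    """
    if baseline_size < 2 or recent_size < 1:
        raise ValueError("need baseline_size >= 2 and recent_size >= 1")
    if len(values) < baseline_size + recent_size:
        raise ValueError("not enough history")
    baseline = values[-(baseline_size + recent_size):-recent_size]
    recent = values[-recent_size:]
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    diff = statistics.mean(recent) - mu
    if sigma == 0:
        # A perfectly flat baseline: any change at all counts as a shift.
        return (0.0, False) if diff == 0 else (math.copysign(math.inf, diff), True)
    # Standard error of a mean of `recent_size` samples, assuming baseline behaviour.
    z = diff / (sigma / math.sqrt(recent_size))
    return z, abs(z) >= z_threshold


# Daily mean post length (in words) on one forum topic, oldest first.
history = [100, 102, 98, 101, 99, 100, 103, 97]
print(detect_shift(history + [100, 101, 99], baseline_size=8, recent_size=3))
print(detect_shift(history + [60, 55, 58], baseline_size=8, recent_size=3))
```

Running the same check on several metrics at once (post length, thread frequency, a tone score from some separate classifier) gives an early-warning dashboard rather than a single noisy alarm.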

Two years ago, we did some research at the beginning of a game launch. We wanted to find the metrics that characterize a community at its inception, and to see whether some actions on our end, such as changing the rules of the metagame, could influence those metrics. The results were positive: we were able to influence the general tone of the community. But we also discovered that there are some really complex questions and a lot of research still to be done to solve this problem. For this specific project, we worked with a professor of communications. She was able to better understand how communities form and what their early dynamics are. She's now using the learnings from the project to help prevent cyberbullying in schools.

La Forge has been a fascinating initiative that bridges academia and game development. What achievements of the collaboration are you most proud of?

I wouldn't point to a specific project, but rather to the change in mindsets Ubisoft La Forge has generated. We wanted to bridge sides that traditionally haven't collaborated well. It's extremely difficult to ask people to consider alternative points of view when they're used to recipes that have worked well for them to this day. Think about fossil fuels and the cars that run on them: it's extremely difficult to move away from them because they work well today.

It's difficult and risky to create a successful game. One of the things I'm very proud of is that some extremely talented people came to Ubisoft La Forge to work in a very collaborative and creative manner that has influenced the way we make games. The collaboration has also influenced academic research and applications in other domains, like the healthcare examples we discussed earlier. My greatest pride is that Ubisoft La Forge is recognized by the gaming industry, by academic leaders, and also by experts from other domains.

Commit-Assistant seems particularly impressive, with the potential of revolutionizing game debugging. Would you describe the process of how that started with La Forge and turned into a tool used by Ubisoft developers?

It all started last year with a PhD student named Mathieu Nayrolles. He was at Concordia University and needed real-life data in order to finish his PhD. He was working on a system that learns from past code submissions. A code submission is a set of lines of code committed to a common repository that is used to build the game. His idea was to use AI to determine whether a new piece of code in the same scope would result in a bug. He wanted to create an AI that used past code and past mistakes to predict new mistakes.

It's a very difficult problem. First, two programmers will never program exactly the same way, so you need to translate their code into a common representation. The other issue is that bugs, and how they were solved, are described in sentences, and sentences can lack precision. One person will see a bug and say, "The animation doesn't behave properly," and another will say, "The animation feels weird." How do you translate that into an actual problem that involves a specific piece of code?
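The first difficulty, making two programmers' code comparable, is often tackled by normalizing code before matching it. A minimal sketch, assuming C++-like input: identifiers and literals are replaced by placeholder tokens, snippets are compared by the Jaccard similarity of their token trigrams, and a new change is flagged if it closely resembles a past bug-introducing change. This is a generic code-clone matching technique with hypothetical function names, a small hand-picked keyword list, and an arbitrary threshold; it is not a description of how Commit-Assistant works, and it does not attempt the second difficulty of linking vague bug descriptions to code.

```python
import re

# Tokens: string literals, numbers, identifiers, a few two-character operators, then any other symbol.
TOKEN_RE = re.compile(r'"(?:\\.|[^"\\])*"|\d+(?:\.\d+)?f?|[A-Za-z_]\w*|==|!=|<=|>=|->|&&|\|\||\S')
KEYWORDS = {"if", "else", "for", "while", "return", "const", "auto", "nullptr",
            "int", "float", "bool", "void"}


def normalize(code):
    """Map C++-like source text to a token sequence that ignores naming and literal values."""
    tokens = []
    for tok in TOKEN_RE.findall(code):
        if tok.startswith('"'):
            tokens.append("STR")
        elif tok[0].isdigit():
            tokens.append("NUM")
        elif tok[0].isalpha() or tok[0] == "_":
            tokens.append(tok if tok in KEYWORDS else "ID")
        else:
            tokens.append(tok)  # operators and punctuation are kept as-is
    return tokens


def ngrams(tokens, n=3):
    """Set of consecutive n-token windows."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def similarity(a, b, n=3):
    """Jaccard similarity of the normalized token n-grams of two code snippets."""
    ga, gb = ngrams(normalize(a), n), ngrams(normalize(b), n)
    if not ga and not gb:
        return 1.0
    return len(ga & gb) / len(ga | gb)


def flag_risky(change, known_buggy, threshold=0.6):
    """Return the past bug-introducing snippets that a new change closely resembles."""
    return [past for past in known_buggy if similarity(change, past) >= threshold]


past_bugs = ['if (ptr != nullptr) { ptr->update(dt); }', 'return "hello";']
new_change = 'if (obj != nullptr) { obj->tick(delta); }'
print(similarity(new_change, past_bugs[0]))  # 1.0: same shape, different names
print(flag_risky(new_change, past_bugs))
```

Because naming is abstracted away, the renamed snippet scores as an exact match; a real system would need much richer signals to keep false positives manageable.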

At Ubisoft La Forge, we decided to open up ten years of our game programming history and granted Mathieu access. After his research and PhD, Ubisoft hired him to lead an initiative to improve our programming pipelines. As an example, Rainbow Six programming lead Nicolas Fleury is pioneering this work and gave a presentation with Mathieu at CppCon (https://youtu.be/QDvic0QNtOY). The team Mathieu leads is gradually expanding to support other Ubisoft productions.

You're very positive on what AI and machine learning can do for game development, game communities, and humanity in general. By contrast, Elon Musk said, "AI is far more dangerous than nukes," and wonders why it isn't more regulated. What do you make of his opinion?

To me, AI should not be compared to a nuclear weapon. If anything, it should be compared to nuclear energy. AI is a tool. The outcome is really up to us, whether we use AI to make something as destructive as a nuke or use it to create something beneficial.

Should we regulate the tool? I'm not sure. We should think more broadly about the kind of society we want. The hype about AI only underlines questions about privacy, security, and fairness. Those questions are all important, but AI doesn't create those problems, and they aren't specific to AI; AI simply highlights them. Regulating AI might be the solution. I don't know, but I'm sure it's not the "silver bullet" some people want. Regulations on technology are often slow and quickly become obsolete. We have to change our behavior first.

Over the last year, many people have become aware of machine learning and AI through naughty deepfake videos and, more recently, the use of deepfakes in the X-Men: Red comics. Does it concern or worry you that some people have a negative perception of advanced AI because of how it's portrayed in the media and entertainment?

If it helps people understand that they should be critical of the information presented to them, then it’s a positive. We have to remember that AI is a powerful tool that can serve both the best and the worst intentions. The more people are aware of its abilities and of what AI and this technology can do, the more they will want to verify the truth by consulting multiple sources of information.

I understand that you have a doctorate in particle physics. Any chance your old friends at CERN will let you borrow the Large Hadron Collider to help Ubisoft's creative efforts? It's not going to be doing anything for two years.

[Laughs] A-ha! We have explored many concepts. We thought of a proton race, but we're not sure the concept would be a bang, actually. [Laughs] We also came up with the concept of a shooter called Schrödinger's Challenge. It involved a cat and got mixed reviews. [Laughs]